AUTO PATROL ROBOT

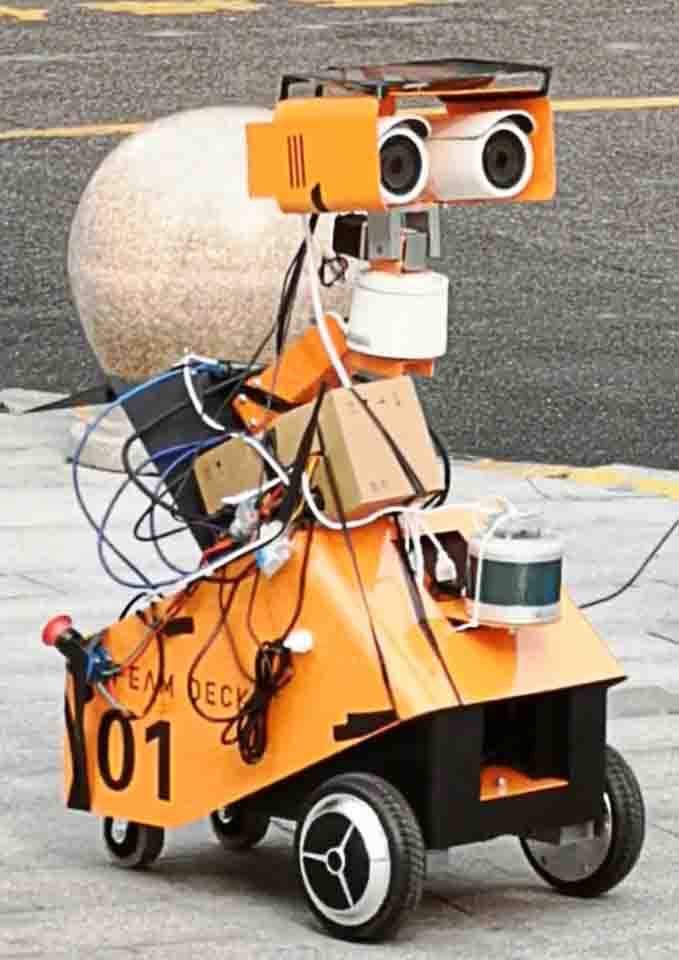

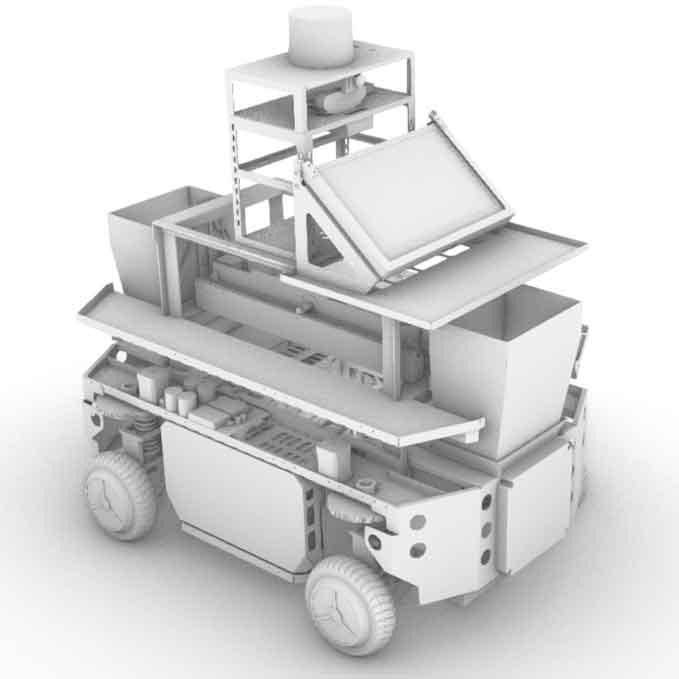

A compact quadruped robot (0.9m×0.6m×1.2m) designed for autonomous indoor/outdoor patrol, anomaly detection, and human interaction in city parks. As the core algorithm developer, I designed and implemented the perception backbone - covering mapping, localization, and multi-sensor fusion - and led the development of autonomous navigation and behavioral AI modules.

Sensing | Navigation | Perception | Interaction

V1 | Sensors Connection

- Date: 2023 NOV-2024 MAR

- Type: Research Project

- Role: Algorithm Developer

- Software: ROS1 (Ubuntu), Autoware, Docker

- Hardware: Jetson Orin NX, LiDAR, IMU, Stereo Camera, GNSS

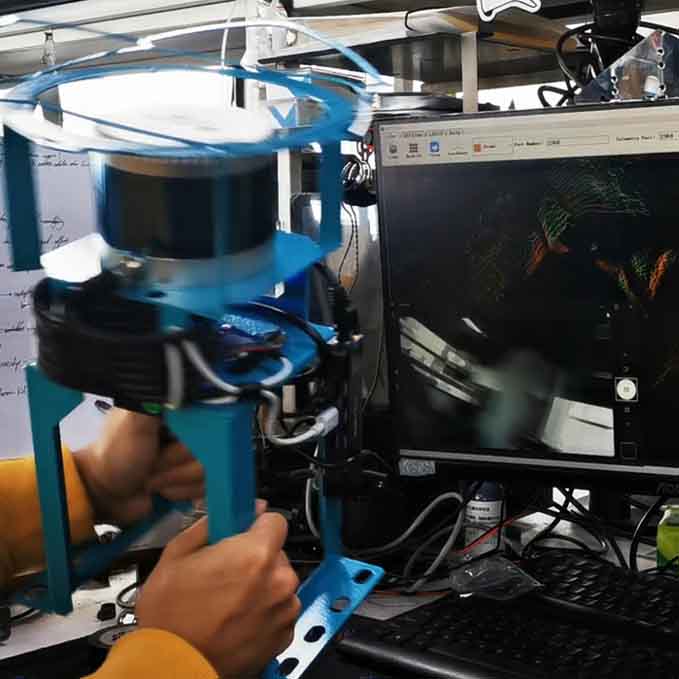

- Description: This phase focused on building the perception backbone through sensor mounting, calibration, data synchronization, and system integration. I tested multiple Autoware versions and settled on a Dockerized Autoware.ai 1.14 for stability.

Perception Layer & Integration

Beyond hardware setup, my main work was ensuring that LiDAR, stereo camera, IMU, and GNSS data could be fused into a single perception system. This included sensor alignment, real-time ROS node setup, and validation of the fusion outputs to support accurate localization.
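As an illustration of the data synchronization step, here is a minimal sketch of nearest-timestamp pairing between two sensor streams. It is not the project's actual code: the function name, the 20 ms tolerance, and the sample timestamps are assumptions made for the example. In ROS1 this role is commonly filled by `message_filters.ApproximateTimeSynchronizer`.

```python
from bisect import bisect_left

def nearest_match(ref_stamps, other_stamps, max_dt=0.02):
    """Pair each reference timestamp with the closest one in other_stamps.

    Both lists must be sorted and expressed in seconds. Pairs that are
    further apart than max_dt are dropped rather than fused.
    """
    pairs = []
    for t in ref_stamps:
        i = bisect_left(other_stamps, t)
        # Only the neighbours just before and just after t can be closest.
        candidates = other_stamps[max(i - 1, 0):i + 1]
        if not candidates:
            continue
        best = min(candidates, key=lambda s: abs(s - t))
        if abs(best - t) <= max_dt:
            pairs.append((t, best))
    return pairs

# Hypothetical timestamps: a 10 Hz LiDAR and a roughly 30 Hz camera.
lidar = [0.00, 0.10, 0.20, 0.30]
camera = [0.005, 0.038, 0.071, 0.104, 0.137, 0.170, 0.203, 0.290]
pairs = nearest_match(lidar, camera)
```

Dropping pairs outside the tolerance, instead of fusing them anyway, is one simple way to keep camera dropouts from producing misaligned fusion results.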

Challenges

As the sole developer initiating the project, I faced a steep learning curve in ROS architecture and Autoware's modular dependencies. Initial trials with Autoware Universe and CARLA simulation suffered from environment and build issues, so I pivoted to Autoware.ai 1.14 in Docker for stability. Despite having no prior background in autonomous systems or ROS, I led the transition from simulation to physical perception tests, resolving hardware dropouts and timing errors under tight deadlines.

V3 | Multi-Modal Perception

- Date: 2024 NOV -

- Type: Grounded Project

- Role: Algorithm Developer

- Software: YOLO, Large Model, MQTT, ROS1, etc.

- Hardware: Jetson Orin NX, RGB Camera, LiDAR, Microphone Array, etc.

- Description: I set up a complete perception backbone that fuses multi-camera, LiDAR, sound, and auxiliary sensors, feeding both fast YOLO models and large multi-modal/ASR models for detection.

Perception Backbone

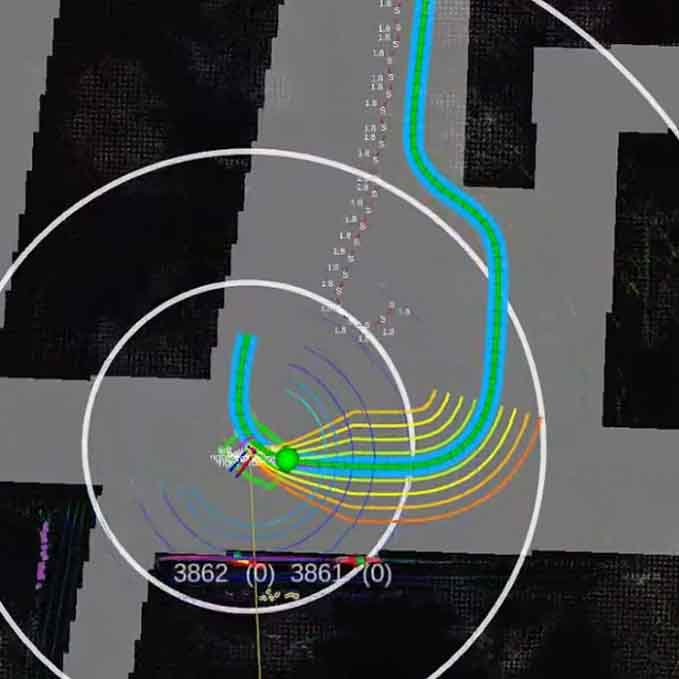

The perception backbone unifies inputs from multi-camera, LiDAR, IMU, microphone array, and other accessories into a single processing pipeline. It outputs three major streams:

1. ROI detection and tracking, from fast YOLO modules.
2. High-level anomaly and context interpretation, from Large Models and ASR.
3. Spatial perception, from LiDAR-camera fusion.

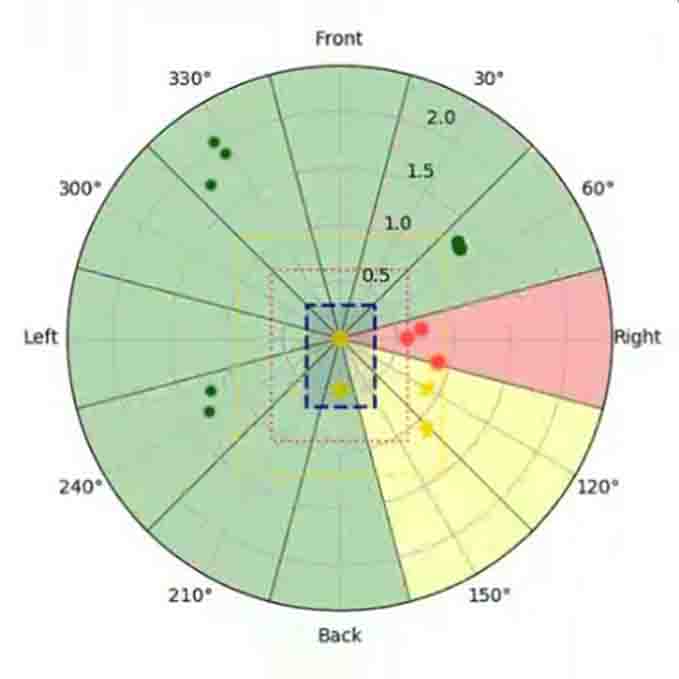

Dual-Rate Detection: A two-layer detection strategy balances response speed against contextual precision. Locally on the vehicle, a self-trained YOLO model runs at a high rate, detecting unwanted behaviors such as smoking or walking dogs in real time. In parallel, selected frames are sent at a low rate to a multi-modal model deployed on a company server, which delivers higher-precision judgements on subtle cases like stepping on grass or fighting.
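The dual-rate idea can be sketched as a small frame router. This is an illustrative sketch only: it assumes frames are selected for the remote model purely by elapsed time, and the class name, target labels, and period are hypothetical rather than taken from the project.

```python
class DualRateScheduler:
    """Decide, per frame, which detectors should receive it."""

    def __init__(self, remote_period_s=2.0):
        self.remote_period_s = remote_period_s
        self._last_remote = None  # timestamp of the last frame sent remotely

    def route(self, stamp):
        # Every frame goes to the on-board detector (high rate).
        targets = ["local_yolo"]
        # Only one frame per period goes to the remote model (low rate).
        due = (self._last_remote is None
               or stamp - self._last_remote >= self.remote_period_s)
        if due:
            targets.append("remote_multimodal")
            self._last_remote = stamp
        return targets

sched = DualRateScheduler(remote_period_s=1.0)
stamps = [0.0, 0.25, 0.5, 0.75, 1.0, 1.25]
routes = [sched.route(t) for t in stamps]
```

With a 4 Hz input and a 1 s remote period, the first and fifth frames are routed to both detectors and the rest stay local. A real selector might also prioritise frames in which the local detector is uncertain.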

Voice Interaction: Live audio is streamed continuously to a large language model, where an agent classifies the detected words into commands, casual dialogue, or emergency cases such as tourists asking for help. Each category triggers a corresponding strategy, from executing control actions to issuing alerts. On the hardware layer, a dual-microphone array filters input from the front direction, while speakers are positioned on the vehicle's side to prevent feedback from its own output.

LiDAR-Camera Fusion: LiDAR points are projected into the camera coordinate system, filtered by YOLO ROIs (regions of interest), and then clustered using a density-based method. The most representative cluster is selected as the final result, providing depth and localization for detected objects.
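The fusion steps above can be sketched in plain Python. Several assumptions are made for illustration: points are already transformed into the camera frame (the LiDAR-to-camera extrinsics are omitted), the camera follows a pinhole model with hypothetical intrinsics, the density-based method is a basic DBSCAN, and the "most representative" cluster is taken to be the largest one. None of these choices is confirmed by the project itself.

```python
import math

def project(point, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point (x right, y down, z forward)."""
    x, y, z = point
    if z <= 0:
        return None  # behind the camera, cannot appear in the image
    return (fx * x / z + cx, fy * y / z + cy)

def points_in_roi(points, roi, intrinsics):
    """Keep points whose projection lies inside roi = (u_min, v_min, u_max, v_max)."""
    u0, v0, u1, v1 = roi
    kept = []
    for p in points:
        uv = project(p, *intrinsics)
        if uv and u0 <= uv[0] <= u1 and v0 <= uv[1] <= v1:
            kept.append(p)
    return kept

def dbscan(points, eps, min_pts):
    """Plain O(n^2) DBSCAN; returns a list of clusters (lists of points)."""
    n = len(points)
    neighbors = [[j for j in range(n) if math.dist(points[i], points[j]) <= eps]
                 for i in range(n)]
    labels = [None] * n  # None = unvisited, -1 = noise, >= 0 = cluster id
    clusters = []
    for i in range(n):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_pts:
            labels[i] = -1  # provisional noise, may later become a border point
            continue
        cid = len(clusters)
        labels[i] = cid
        members = [i]
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:  # noise reached from a core point: border point
                labels[j] = cid
                members.append(j)
            if labels[j] is not None:
                continue
            labels[j] = cid
            members.append(j)
            if len(neighbors[j]) >= min_pts:  # core point: keep expanding
                queue.extend(neighbors[j])
        clusters.append([points[k] for k in members])
    return clusters

def locate(points, roi, intrinsics, eps=0.3, min_pts=3):
    """Centroid of the largest cluster inside the ROI, or None if there is none."""
    clusters = dbscan(points_in_roi(points, roi, intrinsics), eps, min_pts)
    if not clusters:
        return None
    best = max(clusters, key=len)  # assumption: largest = most representative
    return tuple(sum(p[k] for p in best) / len(best) for k in range(3))

intrinsics = (500.0, 500.0, 320.0, 240.0)  # hypothetical fx, fy, cx, cy
roi = (270, 190, 370, 290)                 # hypothetical YOLO box in pixels
cloud = [
    (0.0, 0.0, 4.0), (0.1, 0.0, 4.0), (0.0, 0.1, 4.0), (0.1, 0.1, 4.0),  # object
    (0.0, 0.0, 10.0), (0.5, 0.0, 10.0),  # sparse background seen through the ROI
    (3.0, 0.0, 4.0),                     # projects outside the ROI
    (0.0, 0.0, -1.0),                    # behind the camera
]
position = locate(cloud, roi, intrinsics)
```

Clustering after the ROI filter matters because a 2D box also captures background points behind the object; the sparse points at 10 m in the example are rejected as noise, so the returned depth reflects the object at about 4 m.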

V4 | Interaction - Voice, Engagement & Control

- Date: 2024 NOV -

- Type: Grounded Project

- Role: Algorithm Developer

- Software: YOLO, Large Model, MQTT, ROS1, etc.

- Hardware: Jetson Orin NX, RGB Camera, LiDAR, Microphone Array, etc.

- Description: The interaction layer translates perception outputs into real-time engagement strategies, handling voice commands, casual dialogue, and emergency cases while coordinating user-facing responses, vehicle movements, and back-end information.

Voice Strategies: After audio is converted to text by ASR, the recognized text is processed by an LLM combined with a knowledge base. This layer classifies the input into three categories: casual dialogue, control commands, or emergencies. Casual dialogue prompts lightweight responses to maintain engagement; commands such as navigation requests are translated into vehicle actions; and emergency cases, like tourists asking for help, immediately trigger protocols to notify police or park staff.
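The three-way routing can be sketched as follows. The keyword matcher is only a stand-in for the LLM and knowledge-base classifier, and every name here (`Intent`, `classify`, `dispatch`, the action strings, the keyword lists) is hypothetical.

```python
from enum import Enum

class Intent(Enum):
    CASUAL = "casual"
    COMMAND = "command"
    EMERGENCY = "emergency"

def classify(text):
    """Keyword stand-in for the LLM + knowledge-base classifier (assumption)."""
    lowered = text.lower()
    # Emergencies are checked first so they win over any embedded command.
    if any(w in lowered for w in ("help", "emergency", "hurt")):
        return Intent.EMERGENCY
    if any(w in lowered for w in ("go to", "stop", "follow")):
        return Intent.COMMAND
    return Intent.CASUAL

def dispatch(text):
    """Map recognized text to the action the interaction layer should take."""
    intent = classify(text)
    if intent is Intent.EMERGENCY:
        action = "notify_staff"        # alert police or park staff
    elif intent is Intent.COMMAND:
        action = "send_to_navigation"  # translate into a vehicle action
    else:
        action = "reply"               # lightweight conversational response
    return {"intent": intent.value, "action": action, "text": text}

results = [dispatch(t) for t in (
    "Please help, my friend is hurt",
    "Go to the north gate",
    "Nice weather today",
)]
```

Keeping classification separate from dispatch means the keyword stub can be swapped for a model call without touching the action mapping.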

Visual Strategies: Visual perception outputs are also mapped into interaction strategies. When unwanted behaviors such as smoking or walking dogs are detected, the robot sends a signal to the backend, issues a voice warning to the individual involved, and directs the navigation system to keep following the case. In more serious scenarios, such as detecting a person who has fallen or groups engaged in fighting, the system sends emergency calls to the backend to alert park guards for assistance.
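One way to express this mapping is a lookup table from detection label to severity and actions, serialized as a JSON payload for the backend. The label names, action strings, and payload fields below are assumptions for illustration; the sketch builds the message only and does not publish it over MQTT.

```python
import json

# Hypothetical label -> strategy table, following the two tiers described above.
WARNING = {"severity": "warning",
           "actions": ["report_backend", "voice_warning", "follow_target"]}
EMERGENCY = {"severity": "emergency",
             "actions": ["emergency_call"]}

STRATEGIES = {
    "smoking": WARNING,
    "dog_walking": WARNING,
    "person_fallen": EMERGENCY,
    "fighting": EMERGENCY,
}

def build_event(label, stamp):
    """Return a JSON payload for a detection, or None for unhandled labels."""
    strategy = STRATEGIES.get(label)
    if strategy is None:
        return None
    return json.dumps({"label": label, "stamp": stamp, **strategy}, sort_keys=True)

warning_event = json.loads(build_event("smoking", 12.5))
emergency_event = json.loads(build_event("fighting", 13.0))
```

A table keeps the policy in one place, so adding a new behavior class means adding one entry rather than another branch.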

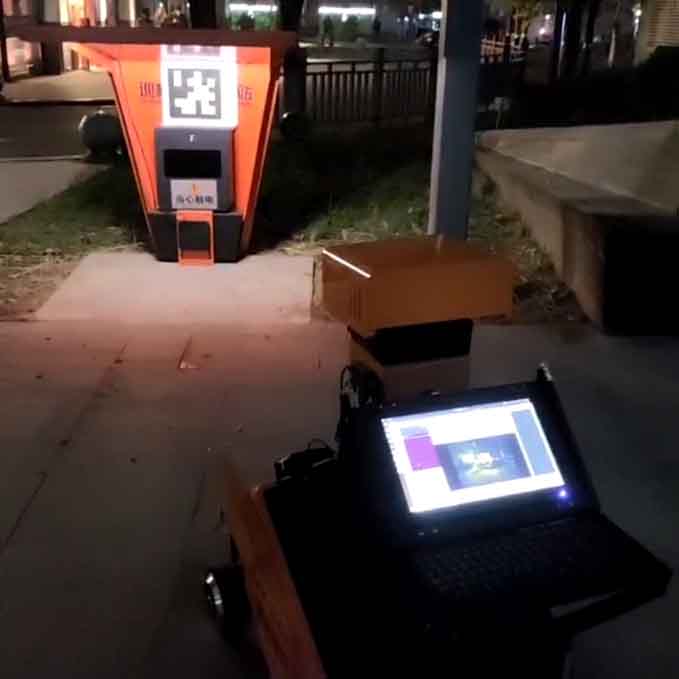

Field Testing & System Stabilization

The final user interaction and platform management experience were shaped by real-world constraints. Nearly half of the development cycle was dedicated to field testing, where I refined vehicle stability, obstacle avoidance, voice responsiveness, and real-time streaming. Early deployments often failed due to issues such as loose cables, network latency, and hardware faults. Through months of iterative debugging and optimization, these problems were systematically resolved, resulting in a stable and reliable system for sustained outdoor operation.

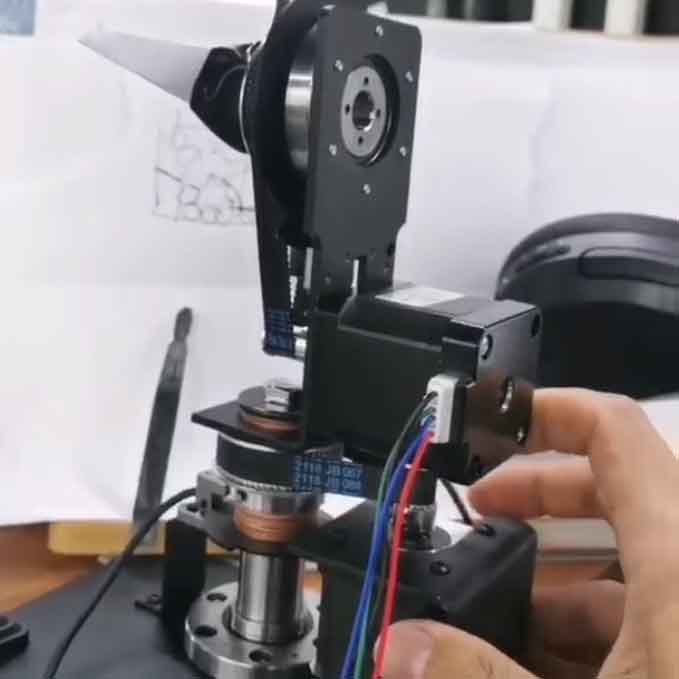

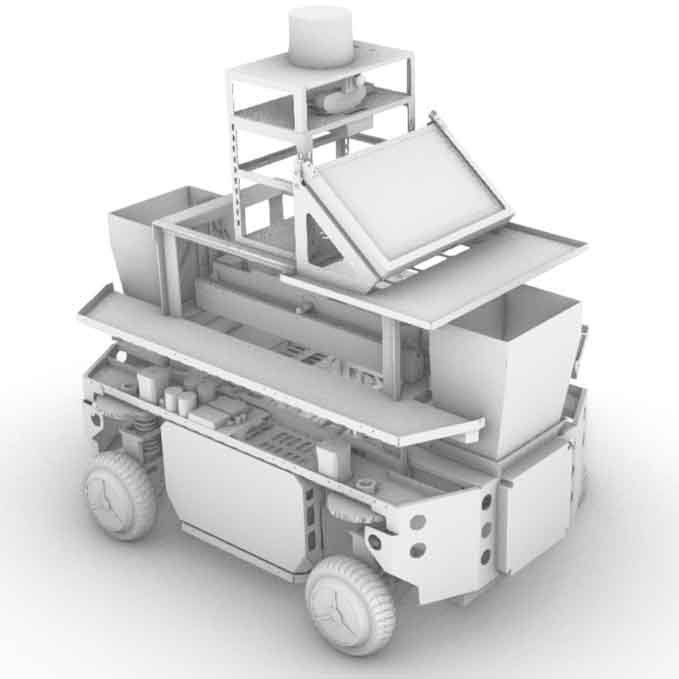

V5 | Four Wheel Drive Vehicle

- Date: 2024 OCT -

- Type: Research Project

- Role: Mechanical & Embedded Engineer

- Software: Rhinoceros

- Hardware: 4WD Suspension Steering System

- Description: A compact 4WD platform I designed for flexible algorithm and sensor validation, small enough for office testing yet capable of supporting advanced navigation trials and various sensors.

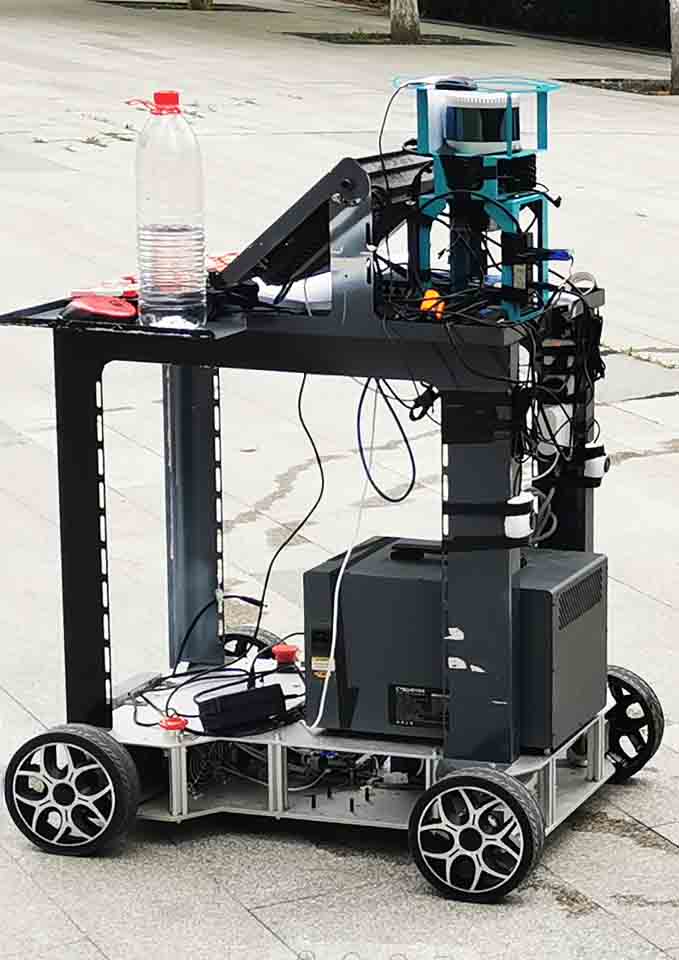

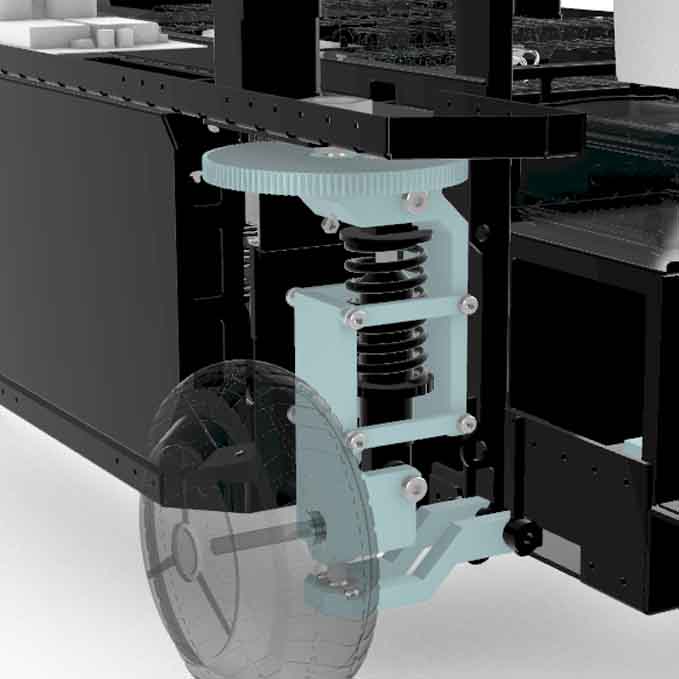

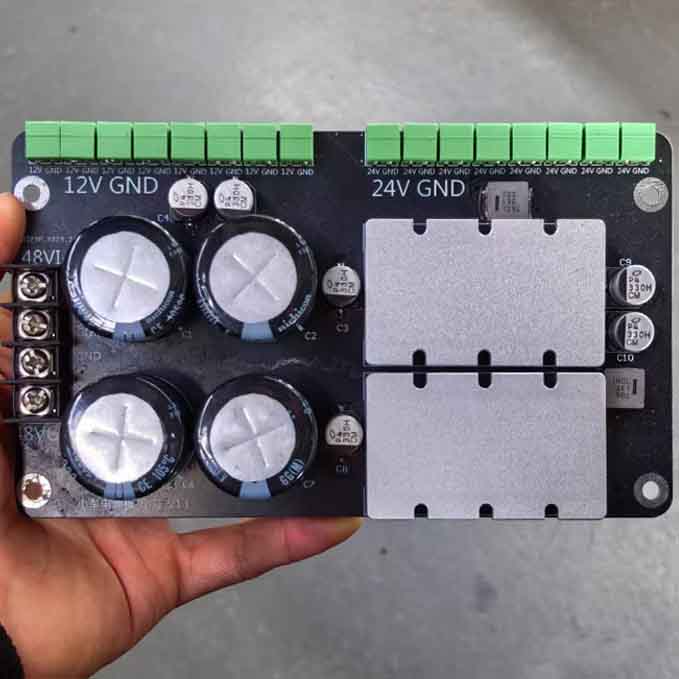

Testbed Vehicle

Developed during a period of frequent chassis failures, this testbed provided a stable platform for both algorithm validation and sensor experiments. Its independent steering and suspension support Ackermann steering, zero-radius turning, and crab-walking, while the modular top layer allows mounting different sensors for rapid prototyping. Slightly smaller than the full-scale vehicle, it can operate indoors, enabling convenient office-based testing.
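The three steering modes can all be derived from one rigid-body relation: a wheel at position (x, y) under a body twist (vx, vy, omega) moves with velocity (vx - omega*y, vy + omega*x). The sketch below is not the testbed's control code; the wheelbase, track width, and function name are hypothetical, and steering limits are ignored.

```python
import math

def wheel_commands(vx, vy, omega, wheels):
    """Steering angle (rad) and signed speed (m/s) per wheel for a planar twist.

    wheels maps a name to the wheel's (x, y) position in the body frame
    (x forward, y left), measured from the point where the twist is defined.
    """
    out = {}
    for name, (x, y) in wheels.items():
        wx = vx - omega * y  # rigid-body velocity at the wheel contact point
        wy = vy + omega * x
        angle = math.atan2(wy, wx)
        speed = math.hypot(wx, wy)
        if abs(angle) > math.pi / 2:
            # Reverse the wheel instead of steering it past 90 degrees.
            angle -= math.copysign(math.pi, angle)
            speed = -speed
        out[name] = (angle, speed)
    return out

L, W = 0.40, 0.30  # hypothetical wheelbase and track width in metres

# Twist defined at the rear-axle midpoint: rear wheels stay straight (Ackermann).
REAR_REF = {"fl": (L, W / 2), "fr": (L, -W / 2),
            "rl": (0.0, W / 2), "rr": (0.0, -W / 2)}
# Twist defined at the geometric centre: used for spinning and crab-walking.
CENTER_REF = {"fl": (L / 2, W / 2), "fr": (L / 2, -W / 2),
              "rl": (-L / 2, W / 2), "rr": (-L / 2, -W / 2)}

ackermann = wheel_commands(0.5, 0.0, 0.5, REAR_REF)  # left turn, 1 m radius
spin = wheel_commands(0.0, 0.0, 1.0, CENTER_REF)     # zero-radius turn
crab = wheel_commands(0.3, 0.3, 0.0, CENTER_REF)     # diagonal crab-walk
```

In the Ackermann case the inner front wheel steers more sharply than the outer one, matching atan(L / (R - W/2)) and atan(L / (R + W/2)) for turn radius R. In the zero-radius case the wheels form an X pattern with the left side reversed, and in the crab case all four wheels share the same angle.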

Reuse & Future Work

Stepper motors and control logic were later reused in the 4-Axis Robotic Arm, while the chassis remains available for perception and navigation testing as a versatile validation tool.