Robotic Arm Project

A versatile research platform for human-robot physical interaction, emphasizing emotional sensing, collaborative behaviors, and tactile feedback. Emotional sensing is the robot's ability to detect and respond to human emotions, aiming for more empathetic and intuitive interaction. The collaborative aspect is designed to engage humans in joint activities, creating a sense of shared experience. Tactile feedback is a crucial feature that adds a physical dimension to interactions, making the robot feel more 'human' and relatable.

V0 | Research on Physical Interaction

- Date: 2023 NOV

- Type: Research Project

- Role: Researcher

- Description: Explore the need for physical interaction to alleviate increasing societal stress, promote emotional resilience, and strengthen social connectivity.

Background

Rising stress and mental discomfort in contemporary society have created a pressing need for innovative approaches to sustaining mental health and emotional resilience. Traditional support systems, such as online strategies and community programs, often lack immediacy or require significant resources. In response to these gaps, my work focuses on human-robot interaction that facilitates physical connection, aiming to alleviate stress and gradually rebuild social contact. This research aims to introduce computer vision techniques for detecting subtle facial emotions, investigate the impact of tactile feedback, and explore collaborative work in human-robot interaction.

V1 | 3-Axis Robotic Eye Powered by Servo Motors

- Date: 2023 DEC - 2024 FEB

- Type: Research Project

- Role: End-to-End

- Software: Rhino, PyCharm

- Hardware: Servo Motors, 3D Printing, etc.

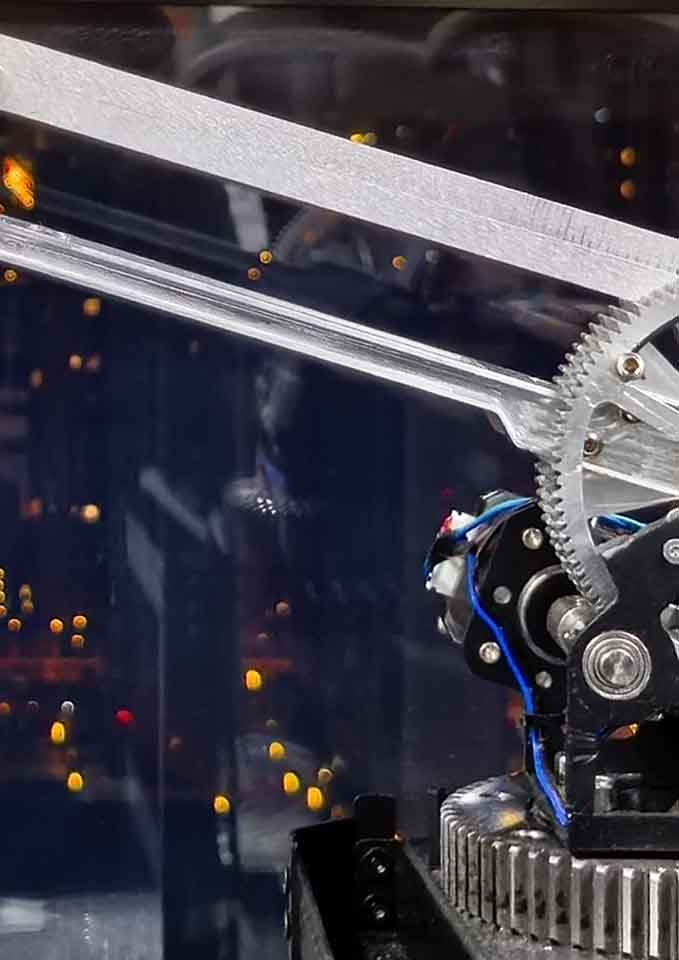

- Description: This version of the robotic arm is powered by bus servos. To improve stability and reduce friction in horizontal rotation, a relatively heavy bearing serves as the base support. A wide-angle camera with a 127-degree horizontal field of view is mounted at the tip of the arm, providing a broad field of vision, while current monitoring of the servos supports touch feedback.
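To steer toward a detected person, the tracking loop has to turn a pixel position into a pan angle. A minimal sketch, assuming an ideal pinhole camera; a 127-degree lens typically shows noticeable distortion, so real frames would likely need undistorting first. The function names and the 1280-pixel frame width are hypothetical.

```python
import math

# Horizontal field of view stated for the camera. Assumption: pinhole model,
# i.e. lens distortion has already been corrected.
HFOV_DEG = 127.0


def focal_length_px(image_width: int, hfov_deg: float = HFOV_DEG) -> float:
    """Focal length in pixels implied by the image width and horizontal FOV."""
    return (image_width / 2) / math.tan(math.radians(hfov_deg) / 2)


def pixel_to_yaw_deg(x: float, image_width: int, hfov_deg: float = HFOV_DEG) -> float:
    """Horizontal angle (degrees) from the optical axis to pixel column x.

    Negative values lie left of the image center, positive values right of it.
    """
    f = focal_length_px(image_width, hfov_deg)
    return math.degrees(math.atan((x - image_width / 2) / f))


if __name__ == "__main__":
    width = 1280  # hypothetical frame width
    for x in (0, 320, 640, 960, 1280):
        print(x, round(pixel_to_yaw_deg(x, width), 1))
```

The frame edges map to plus or minus half the field of view, and the angle grows non-linearly toward the edges, which is why a fixed pixels-to-degrees ratio would misjudge people near the border of a wide-angle image.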

Early Versions: Early prototypes faced high friction in the horizontal rotation plate and motor burnout from an imbalanced head. To resolve this, I integrated a large bearing for reduced friction and added a counterweight at the arm's end to balance the secondary arm, ensuring smoother operation and preventing motor overload.

Detect, Track & Search

The platform's present sensing capabilities are based primarily on computer vision, which lets it detect and track human presence. A pivotal feature is its ability to 'search' for people when they move out of its field of view. Initially, the robot could only sense and track people; this lacked depth of interaction and made it feel 'unhuman'. Introducing the search behavior was a leap toward making the robot feel alive: actively looking around when it loses track of a person gives it a more natural, lifelike presence.
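The switch between tracking and searching can be expressed as a small state machine. The sketch below is hypothetical and covers a single pan axis: it assumes the detector supplies, each frame, the person's angular offset from the camera axis (or `None` when nobody is seen), uses proportional control while tracking, and sweeps back and forth once the person has been missing for several frames. The class name, gains, and limits are illustrative, not the project's values.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Optional


class Mode(Enum):
    TRACK = auto()   # a person is, or was recently, visible
    SEARCH = auto()  # person lost for a while: sweep to find them again


@dataclass
class PanController:
    """Hypothetical detect/track/search behavior for a single pan axis."""
    pan_deg: float = 0.0
    gain: float = 0.5             # proportional gain on the angular error
    lost_after: int = 5           # missed frames before switching to SEARCH
    sweep_step_deg: float = 4.0   # pan increment per frame while searching
    pan_limit_deg: float = 90.0   # assumed mechanical range of +/-90 degrees
    mode: Mode = Mode.TRACK
    missed: int = field(default=0, init=False)
    sweep_dir: float = field(default=1.0, init=False)

    def update(self, target_offset_deg: Optional[float]) -> float:
        """Advance one frame and return the new pan command in degrees."""
        if target_offset_deg is not None:
            # Person visible: reset the miss counter and steer toward them.
            self.missed = 0
            self.mode = Mode.TRACK
            self.pan_deg += self.gain * target_offset_deg
        else:
            # Person not visible: hold still briefly, then start searching.
            self.missed += 1
            if self.missed >= self.lost_after:
                self.mode = Mode.SEARCH
            if self.mode is Mode.SEARCH:
                self.pan_deg += self.sweep_dir * self.sweep_step_deg
                if abs(self.pan_deg) >= self.pan_limit_deg:
                    self.sweep_dir = -self.sweep_dir  # reverse at the range limit
        # Never command a position outside the mechanical range.
        self.pan_deg = max(-self.pan_limit_deg, min(self.pan_limit_deg, self.pan_deg))
        return self.pan_deg
```

Holding still for a few frames before sweeping keeps the robot from flinching at single missed detections, while the sweep gives it the "looking around" behavior described above.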

Touch Feedback

Touch feedback is achieved indirectly, through power control and current monitoring of the servos, since they do not provide torque feedback themselves. When the robot is stationary, the motors release their holding torque so the arm can be moved by hand. During movement, a significant rise in current indicates that the motion is obstructed. If the robot's position is physically altered, the human detection and tracking system prompts the motors to return to their intended path, resulting in a responsive touch feedback experience.
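One way to turn "a significant rise in current" into a yes/no signal is to compare each reading against a running baseline. A minimal sketch, assuming the servos report current in milliamps on every control cycle while moving; the class name, the exponential-moving-average baseline, and all thresholds are hypothetical choices that would need tuning on the real hardware.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ObstructionDetector:
    """Flags an obstruction when servo current stays well above its running baseline."""
    alpha: float = 0.1           # smoothing factor of the exponential moving average
    ratio: float = 1.8           # a spike must exceed baseline * ratio ...
    min_excess_ma: float = 80.0  # ... and exceed the baseline by at least this many mA
    hold_samples: int = 3        # consecutive spikes required, to reject one-off noise
    baseline_ma: Optional[float] = field(default=None, init=False)
    spikes: int = field(default=0, init=False)

    def update(self, current_ma: float) -> bool:
        """Feed one current sample (mA) taken while the arm is moving.

        Returns True once the current has spiked for hold_samples samples in a row.
        """
        if self.baseline_ma is None:
            # The first sample seeds the baseline.
            self.baseline_ma = current_ma
            return False
        is_spike = (current_ma > self.baseline_ma * self.ratio
                    and current_ma - self.baseline_ma > self.min_excess_ma)
        if is_spike:
            self.spikes += 1
        else:
            self.spikes = 0
            # Learn the baseline only from normal samples, so a sustained push
            # is not gradually absorbed into it.
            self.baseline_ma += self.alpha * (current_ma - self.baseline_ma)
        return self.spikes >= self.hold_samples
```

Requiring both a relative and an absolute excess avoids false alarms at very low currents, where small fluctuations can exceed a pure ratio test.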

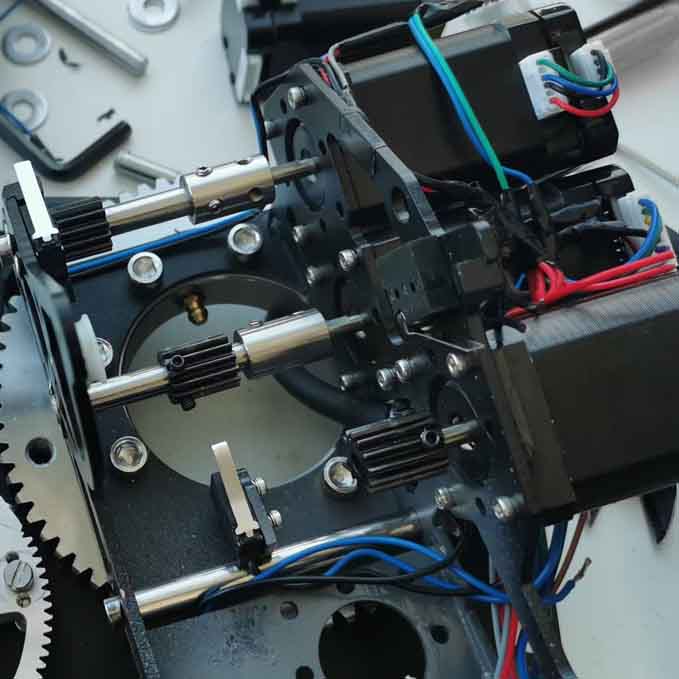

V2 | 4-Axis Self-Balancing Robotic Arm

- Date: 2024 NOV -

- Type: Research Project

- Role: End-to-End

- Software: PyCharm

- Hardware: Stepper Motors, Machined Aluminum Parts, Sheet Metal

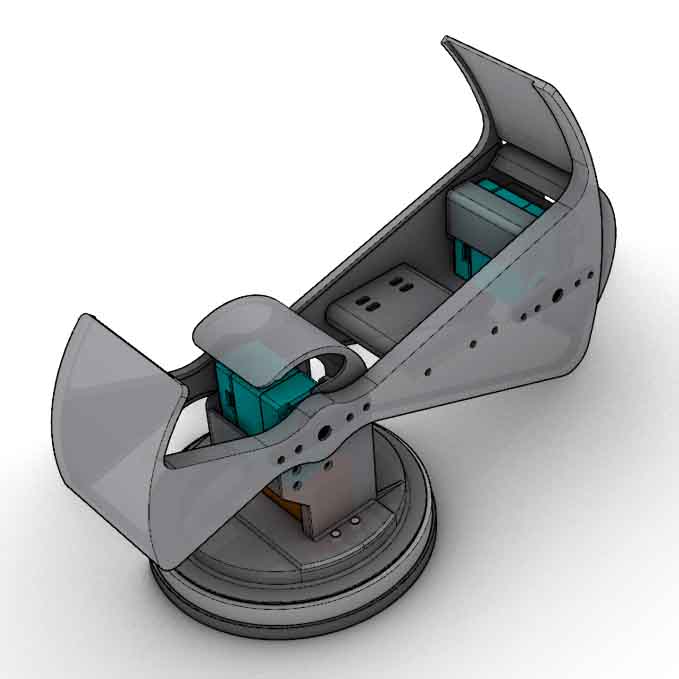

- Description: This robotic arm, based on the MK3 series, uses stepper motors for precise control and adds a degree of freedom at the end effector. A wire-spring counterweight system provides passive balancing, reducing motor torque requirements while maintaining stability and safety.

Robotic Arm

Designed for potential outdoor use, this robotic arm prioritizes lightweight construction and safety. The focus is on minimizing the weight of moving parts while implementing a self-balancing mechanism to reduce motor torque requirements. By adjusting its motion dynamically based on torque feedback, the system protects users and prevents damage to the arm during operation, combining mechanical efficiency with adaptive control.
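A simple way to act on torque feedback is to slow a joint as its load approaches a limit and stop it at the limit. The following is a hedged sketch, not the project's controller: the function name, the linear ramp, and the soft/hard limits are assumptions, and torque is in whatever units the motor driver reports.

```python
def scale_speed(commanded_speed: float, measured_torque: float,
                soft_limit: float, hard_limit: float) -> float:
    """Slow a joint as its measured load approaches a torque limit.

    Below soft_limit the commanded speed passes through unchanged; between the
    soft and hard limits it ramps linearly down to zero; at or above hard_limit
    the joint is stopped. Torque may be signed; only its magnitude is compared.
    """
    if not 0 <= soft_limit < hard_limit:
        raise ValueError("expected 0 <= soft_limit < hard_limit")
    load = abs(measured_torque)
    if load <= soft_limit:
        return commanded_speed
    if load >= hard_limit:
        return 0.0
    # Linear ramp: full speed at soft_limit, zero at hard_limit.
    return commanded_speed * (hard_limit - load) / (hard_limit - soft_limit)
```

The gradual ramp, rather than an on/off cutoff, is meant to make contact feel soft instead of abrupt; the passive balancing keeps normal loads low, which leaves more headroom below the soft limit.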

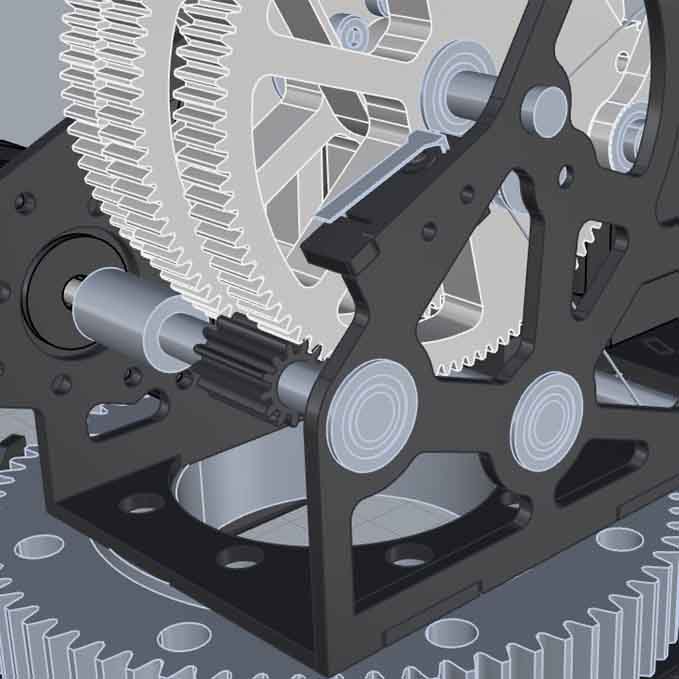

Tolerance Design: To mitigate assembly errors from machining tolerances, I designed shaft holes with +0.02 mm clearance and motor screw holes with a +0.2 mm allowance for manual adjustment. However, laser cutting and welding of the sheet-metal frame introduced unpredictable inaccuracies. To address this, I added alignment slots with a +0.125 mm tolerance to ensure precise component fitting.
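The allowances above translate directly into design dimensions. A small worked sketch, assuming each allowance is added to the nominal diameter (holes) or width (slots) rather than per side; the 8 mm shaft, M3 screw, and 2 mm tab used in the example are hypothetical nominal sizes.

```python
# Allowances stated in the text, in mm. Assumption: added to the nominal
# diameter or width as a whole, not to each side.
ALLOWANCE_MM = {
    "shaft_hole": 0.02,       # close clearance for shafts
    "motor_screw_hole": 0.2,  # extra room for manual adjustment
    "alignment_slot": 0.125,  # absorbs sheet-metal cutting and welding errors
}


def cut_size_mm(feature: str, nominal_mm: float) -> float:
    """Design size of a hole or slot: nominal size plus the feature's allowance."""
    return round(nominal_mm + ALLOWANCE_MM[feature], 3)


def play_per_side_mm(feature: str) -> float:
    """How far a centered part can shift toward either wall before touching it."""
    return ALLOWANCE_MM[feature] / 2


if __name__ == "__main__":
    print(cut_size_mm("shaft_hole", 8.0))        # hypothetical 8 mm shaft
    print(cut_size_mm("motor_screw_hole", 3.0))  # hypothetical M3 screw
    print(cut_size_mm("alignment_slot", 2.0))    # hypothetical 2 mm tab
    print(play_per_side_mm("alignment_slot"))
```

Under this assumption, a tab centered in an alignment slot can move about 0.06 mm toward either side, which bounds how much frame error a single slot can absorb.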

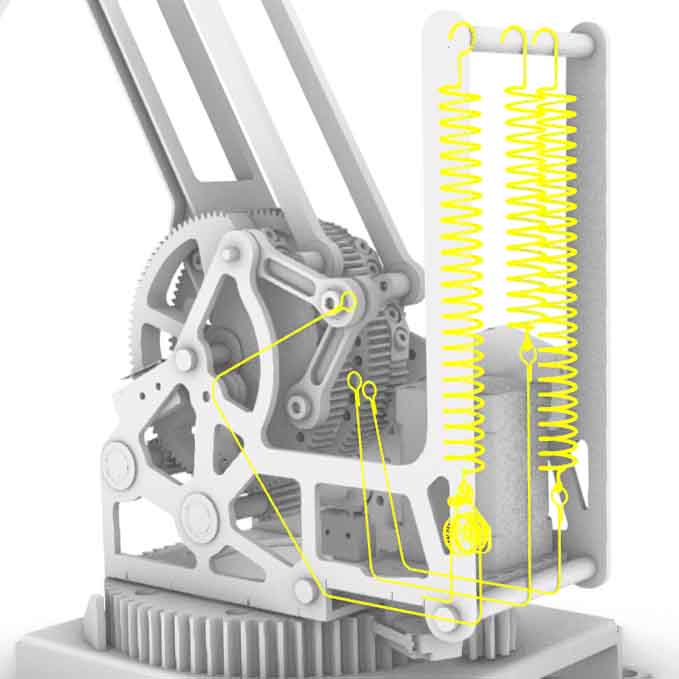

Counterweight Balancing: To achieve gravity-based self-balancing, I used the Kangaroo physics engine in Grasshopper (Rhino) to simulate the torque distribution across the arm's joints under a 1 kg load at various angles. Based on these simulations, I designed a hybrid tension system combining wires, linkages, pulleys, and springs. By calculating the required spring forces and displacements, I reverse-engineered the spring specifications needed to keep the arm in equilibrium.
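For intuition on why a spring can balance gravity at every angle, consider the textbook case of an ideal zero-free-length spring on a single link. This is a simplified stand-in for the Kangaroo simulation, not the project's hybrid wire/linkage/pulley design: it ignores the link's own mass and assumes a spring whose force is proportional to its full length (real springs have a nonzero free length). With the spring attached a distance a along the link and anchored b directly above the joint, gravity torque is m g l sin(theta) and spring torque is k a b sin(theta), so k = m g l / (a b) cancels gravity at every angle. The 1 kg load comes from the text; the 0.30 m arm and the 0.05 m / 0.10 m mounting distances are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def balancing_stiffness(mass_kg: float, load_arm_m: float, a_m: float, b_m: float) -> float:
    """Stiffness (N/m) of an ideal zero-free-length spring that balances a point load."""
    return mass_kg * G * load_arm_m / (a_m * b_m)


def net_torque(theta_rad: float, mass_kg: float, load_arm_m: float,
               a_m: float, b_m: float, k: float) -> float:
    """Net torque (N*m) about the joint, with theta measured from vertical."""
    ux, uy = math.sin(theta_rad), math.cos(theta_rad)  # unit vector along the link
    # Gravity (0, -m*g) acting at distance l along the link: z-component of r x F.
    tau_gravity = (load_arm_m * ux) * (-mass_kg * G)
    # Spring force k * (anchor - attachment) acting at the attachment point.
    ax, ay = a_m * ux, a_m * uy
    fx, fy = k * (0.0 - ax), k * (b_m - ay)
    tau_spring = ax * fy - ay * fx
    return tau_gravity + tau_spring


if __name__ == "__main__":
    k = balancing_stiffness(1.0, 0.30, 0.05, 0.10)
    print(f"required stiffness: {k:.1f} N/m")
    for deg in (0, 30, 60, 90, 120):
        print(deg, net_torque(math.radians(deg), 1.0, 0.30, 0.05, 0.10, k))
```

The residual torque is zero up to floating-point error at every angle; with a real spring and a distributed link mass the cancellation is only approximate, which is where simulation of the actual geometry becomes necessary.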

Counterweight Balancing Simulation

Stepper Motors: The stepper motors are arranged compactly on one side of the base, with couplings extending their shafts to the corresponding axes. Each motor has a unique ID, so all motors can be controlled over a shared TTL/CAN bus, which minimizes cable clutter and streamlines communication.
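With one ID per motor, every driver listens on the same line and acts only on frames carrying its own ID. The sketch below simulates that addressing in plain Python. The frame layout, checksum, and command code are hypothetical and do not match any particular TTL or CAN driver protocol; on a real CAN bus the ID travels in the frame's arbitration field and error checking is handled by the controller hardware.

```python
from dataclasses import dataclass
from typing import List

CMD_MOVE_TO = 0x01  # hypothetical command code: absolute move, 4-byte signed steps


def checksum(body: bytes) -> int:
    """8-bit additive checksum (hypothetical; real drivers define their own)."""
    return sum(body) & 0xFF


def build_frame(motor_id: int, command: int, data: bytes = b"") -> bytes:
    """Assumed frame layout: [motor_id, command, data_length, data..., checksum]."""
    body = bytes([motor_id, command, len(data)]) + data
    return body + bytes([checksum(body)])


@dataclass
class SimulatedMotor:
    motor_id: int
    position: int = 0

    def on_frame(self, frame: bytes) -> bool:
        """Accept a frame only if it is intact and addressed to this motor."""
        if len(frame) < 4:
            return False
        body, check = frame[:-1], frame[-1]
        if checksum(body) != check or body[0] != self.motor_id:
            return False
        command, length = body[1], body[2]
        data = body[3:3 + length]
        if command == CMD_MOVE_TO and len(data) == 4:
            self.position = int.from_bytes(data, "big", signed=True)
        return True


def broadcast(bus: List[SimulatedMotor], frame: bytes) -> List[int]:
    """Put one frame on the shared line; every motor sees it, only the addressee acts."""
    return [m.motor_id for m in bus if m.on_frame(frame)]


if __name__ == "__main__":
    bus = [SimulatedMotor(1), SimulatedMotor(2), SimulatedMotor(3)]
    frame = build_frame(2, CMD_MOVE_TO, (-1200).to_bytes(4, "big", signed=True))
    print(broadcast(bus, frame), [m.position for m in bus])
```

Because all motors share one pair of wires, adding an axis means assigning a new ID rather than running a new cable back to the controller.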

Motion Test

Each axis is equipped with a limit switch for homing, plus physical stoppers that prevent the robotic arm from exceeding its designed range of motion.
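A common homing routine drives an axis toward its limit switch, stops when the switch triggers, and backs off so the switch releases; that point then becomes the axis zero. A minimal sketch under stated assumptions: the hardware interface is reduced to two hypothetical callables, negative steps move toward the switch, and a simulated axis stands in for the real motor. The step budget keeps a failed switch from driving the axis into its physical stopper indefinitely.

```python
from typing import Callable


def home_axis(read_switch: Callable[[], bool],
              step: Callable[[int], None],
              max_steps: int = 10_000,
              backoff_steps: int = 50) -> int:
    """Drive an axis onto its limit switch, then back off; return steps travelled.

    Assumptions (hypothetical interface): read_switch() is True while the switch
    is pressed, step(-1) moves one step toward the switch and step(+1) away.
    After this returns, the caller would define the current position as zero.
    """
    travelled = 0
    while not read_switch():
        if travelled >= max_steps:
            # Never reached the switch (e.g. broken wiring): stop instead of
            # pushing against the physical stopper.
            raise RuntimeError("limit switch not reached within max_steps")
        step(-1)
        travelled += 1
    for _ in range(backoff_steps):
        step(+1)
    if read_switch():
        raise RuntimeError("switch still pressed after backing off")
    return travelled


class SimulatedAxis:
    """Stand-in for real hardware: the switch is pressed at or below switch_pos."""

    def __init__(self, start: int, switch_pos: int = 0) -> None:
        self.pos = start
        self.switch_pos = switch_pos

    def read_switch(self) -> bool:
        return self.pos <= self.switch_pos

    def step(self, direction: int) -> None:
        self.pos += direction
```

Backing off before zeroing means the axis always starts from a released switch, so the next homing run sees a clean press instead of a switch that is already triggered.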

Current Progress

After the basic control algorithms were developed, work on this system was suspended; it will resume once the Auto Patrol project becomes stable.

V3 | Future

Sensing & Feedback

Following the completion of motion control development, the next phase focuses on improving perception and adaptive motion models. The aim is to create a shared experience between the robot and its users, strengthening the sense of collaboration and connection through responsive, context-aware interactions.